How your AI startup’s legal strategy can help secure enterprise customers

By Adine Mitrani, Fenwick & West LLP, and Mariana Antcheva, Pilot

AI adoption is now mainstream, and that means the buying calculus has changed. Enterprises aren’t asking, “Should we use AI?” but rather, “Which AI will deliver the strongest ROI?” They are now more skeptical of hype, some regulators are watching closely, and investors expect you to secure multi-year deals with recurring revenue.

That means that if you’re building an AI startup, success will hinge in large part on the trust you build with customers and partners. Trust is earned through transparency, execution, and precision—the same qualities that signal enterprise fit, help close deals, and hold up in real workflows.

In this article, co-authored with tech and life sciences law firm Fenwick, we’ll walk through four areas of your legal strategy and AI governance policies that can help: how you frame your solution, how you handle data rights, how you explain your technology, and how you manage risk over time. Startups that handle these areas thoughtfully are well-positioned to close more enterprise deals.

Frame your solution around customer success

Let’s start with the basics: Most enterprise buyers don’t focus too much on the technical details in your model architecture or how many parameters it has. They care about outcomes—solutions that fit seamlessly into existing workflows, deliver measurable ROI quickly, and create persistent value over time.

For example:

- An IT monitoring product that lowers unplanned downtime by 20%

- A support automation tool that reduces average handle time from 10 minutes to 6 minutes

- A fraud detection system that catches anomalies 25% earlier than the customer’s current tools

Those numbers, when backed by pilot studies or case evidence, tell a story that resonates with buyers and unlocks procurement budgets.

Priorities and risk tolerance also differ from one stakeholder to the next:

- A CIO may be hypersensitive to resilience and downtime

- A head of customer service may care about cost per ticket and accuracy

- A compliance officer may be focused on auditability and trade secret leakage

Your framing should flex depending on the buyer. If you use the same pitch deck for everyone, you’re probably missing opportunities.

And be careful with your language. The Federal Trade Commission (FTC) has already made clear that it’s monitoring AI marketing claims. Overpromising is risky. If you say your system “replaces humans” or is “100% accurate,” you could invite both customer skepticism and regulatory scrutiny. Instead, anchor your messaging in verifiable results. You can still be bold—but be bold with demonstrable facts.

Founder takeaway: Frame outcomes first, tailor your narrative to the buyer persona and their risk tolerance, and substantiate your claims. You’ll move faster through procurement, and you’ll power your customer acquisition flywheel.

Navigate the data rights spectrum

High-quality data yields higher-performing models, which, in turn, produce better customer outcomes. But while startups look to use customer data to improve their products, enterprises are tightening controls, frequently adding “no training” provisions that prohibit providers from using customer data to train or refine models.

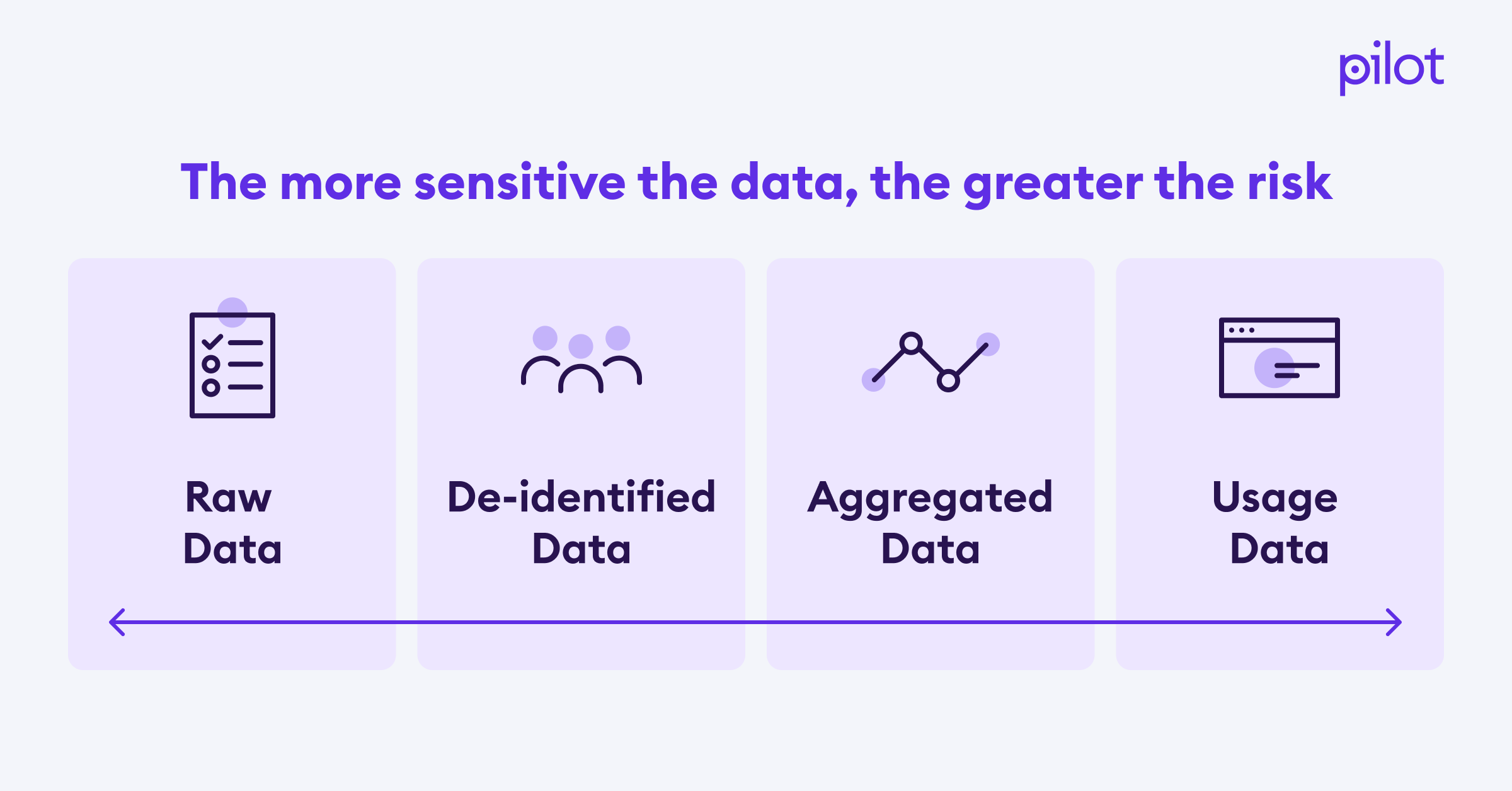

The solution isn’t necessarily to push harder—it’s to navigate the data rights spectrum with care, depending on your actual and planned use cases.

At the lightest end, you have usage metrics and telemetry: logs of system performance, feature usage, and error rates. These are usually non-controversial, and collecting them is standard practice in SaaS. Customers typically accept these practices because they assume the data collected makes for a better customer experience. Be aware, though, that some AI provider contracts define “telemetry data” so broadly that the definition sweeps in raw customer data (and, in turn, customers grant the providers broad rights to use it).

Next is benchmarking data—patterns across multiple customers that deliver valuable insights and can inform your model. Use of customer data for benchmarking is often negotiable if you can demonstrate that no single customer can be identified. That can include, for example:

- Setting aggregation thresholds (e.g., insights are only published when data is pooled from at least five customers)

- Running regular re-identification risk assessments

- Showcasing documentation of your de-identification methodology
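
The aggregation threshold in the first bullet can be sketched in a few lines of Python. This is a minimal illustration rather than a complete de-identification method: the record layout `(customer_id, segment, value)`, the function name `benchmark_by_segment`, and the default of five customers (borrowed from the example above) are all assumptions.

```python
from collections import defaultdict
from statistics import mean

MIN_CUSTOMERS = 5  # assumed threshold, taken from the "at least five customers" example


def benchmark_by_segment(records, min_customers=MIN_CUSTOMERS):
    """Average a metric per segment, suppressing thinly populated segments.

    records: iterable of (customer_id, segment, value) tuples (hypothetical layout).
    Returns {segment: mean value} only for segments backed by at least
    `min_customers` distinct customers.
    """
    values = defaultdict(list)
    customers = defaultdict(set)
    for customer_id, segment, value in records:
        values[segment].append(value)
        customers[segment].add(customer_id)

    return {
        segment: mean(segment_values)
        for segment, segment_values in values.items()
        if len(customers[segment]) >= min_customers
    }
```

The check counts distinct customers rather than rows, so a segment with thousands of rows from only two customers is still suppressed.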

One step further is de-identified customer data, which is more sensitive because it begins as customer-specific. Here, many customers will expect robust technical and procedural safeguards, such as multi-step anonymization (e.g., removing personal identifiers such as names and titles, removing customer identifiers such as brand and address, and obfuscating identification and account numbers), secure storage, data retention limits, audits, and clear communication about your processes. The value proposition is that everyone benefits when the ecosystem improves, but you must be cautious with your wording. Claims of “fully anonymized” and the like can trigger regulatory scrutiny, because some de-identification methods are susceptible to re-identification.
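
As a rough sketch of what a multi-step pass might look like, the Python below drops direct identifiers, replaces an account number with a keyed hash, and redacts number-like strings in free text. The field names (`name`, `title`, `brand`, `address`, `account_number`, `notes`), the account-number pattern, and the helper names are all hypothetical. Keyed hashing is pseudonymization, not anonymization, which is one reason to avoid describing output like this as “fully anonymized.”

```python
import hashlib
import hmac
import re

# Hypothetical field names; a real schema will differ.
DROP_FIELDS = {"name", "title", "brand", "address"}
# Assumed format: account numbers are runs of 8 to 16 digits.
ACCOUNT_PATTERN = re.compile(r"\b\d{8,16}\b")


def pseudonymize(value: str, secret: bytes) -> str:
    """Replace a value with a keyed hash so records can still be joined."""
    digest = hmac.new(secret, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return "acct_" + digest[:12]


def deidentify(record: dict, secret: bytes) -> dict:
    """Apply three steps: drop identifiers, hash account numbers, redact free text."""
    # Step 1: remove personal and customer identifiers outright.
    cleaned = {k: v for k, v in record.items() if k not in DROP_FIELDS}
    # Step 2: obfuscate the structured account number.
    if "account_number" in cleaned:
        cleaned["account_number"] = pseudonymize(str(cleaned["account_number"]), secret)
    # Step 3: redact account-like numbers that leak into free-text fields.
    if "notes" in cleaned:
        cleaned["notes"] = ACCOUNT_PATTERN.sub("[REDACTED]", cleaned["notes"])
    return cleaned
```

A pass like this still leaves quasi-identifiers (dates, locations, rare values) in place, so it complements rather than replaces the re-identification risk assessments mentioned earlier.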

At the far end of the spectrum is raw, unmodified customer data. This is desirable for training, but it can be difficult to obtain. Many enterprises won’t consider it unless there’s a clear value exchange, such as discounted pricing or enhanced service tiers. Other enterprises may require you to commit to segregating their customers’ data in a separate model or sandbox, or to training on-premises. As these technical solutions are more complex and take time to build, an increasing number of startups are experimenting with tiered pricing models that align discounts with the level of data sharing a customer is willing to permit.

Ultimately, the key takeaway is this: don’t just say what you want. Be ready to explain why you want it, to demonstrate the protections you’ll put in place to safeguard information, and, as needed, to remain flexible to a large enterprise’s data sensitivities. Customers are more willing to negotiate when they understand how their data contributions create product improvements that benefit them directly. Keep in mind that, in some cases, the customer may be prohibited by regulation or contract from allowing you to train on their data (e.g., the data belongs to your customer’s end users, and the customer does not have contractual rights to allow training on it).

Match explainability to the stakes

Transparency is another area where startups should focus. Some over-engineer it, adding dashboards and audit logs customers don’t use. Others underinvest, offering only surface-level explanations for sensitive systems. The right approach depends on the context.

If your AI is making high-stakes decisions—like credit approvals, healthcare recommendations, or regulatory compliance-related judgment calls—customers will demand maximum transparency. That means explainable models, audit trails, and human oversight. These customers need to justify decisions to regulators and courts, not just to their internal teams.

If your AI is a decision-support tool—say, a system that helps analysts sift through data or suggests options in workflow automation—the bar is often lower. Customers usually want to understand the broad logic and trust the outputs, but they don’t need full interpretability at the code level. The AI tool still needs to work properly, though. There is an ongoing class action in which the plaintiffs allege that a provider of a “human-in-the-loop” AI screening tool acted as an agent in the hiring process, potentially making it liable for the tool’s biased outcomes.

If your AI is an important but non-critical tool—like internal productivity aids or some organizational software—minimal transparency may suffice. In those cases, customers may be happy with basic documentation of how the tool functions, without detailed traceability of every decision.

The principle is straightforward: The more your AI substitutes for human judgment or impacts external stakeholders, the more transparency you need. Don’t treat explainability as a one-size-fits-all feature. Calibrate it to the stakes, and invest your engineering time where it builds the most trust.

Treat risk management as an ongoing discipline

Launching your product or closing that major deal is just the beginning of earning customer trust. You should also continuously monitor your AI systems’ performance.

Good risk management involves:

- Bias and drift detection. Regularly test your models for performance degradation or discriminatory outcomes.

- Periodic audits. These should include both internal reviews and, when possible, third-party assessments.

- Feedback loops. Build channels where customers can quickly flag issues, and have a structured process to resolve them.
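
The first bullet can be made concrete with two simple checks: one compares recent accuracy against a baseline window to flag drift, and the other compares favorable-outcome rates across groups as a basic bias signal. This is a minimal sketch under stated assumptions: the function names, the input shapes, and the `max_drop` default are placeholders for illustration, not legal or industry standards.

```python
def accuracy(pairs):
    """pairs: non-empty list of (predicted, actual) labels."""
    return sum(predicted == actual for predicted, actual in pairs) / len(pairs)


def has_drifted(baseline_pairs, recent_pairs, max_drop=0.05):
    """Flag drift when accuracy falls more than `max_drop` below the baseline.

    max_drop is an illustrative placeholder; pick a tolerance per use case.
    """
    return accuracy(baseline_pairs) - accuracy(recent_pairs) > max_drop


def selection_rate_ratio(outcomes):
    """outcomes: list of (group, favorable) pairs, where favorable is a bool.

    Returns the lowest group's favorable-outcome rate divided by the highest
    group's rate. Values well below 1.0 suggest the groups are treated unevenly.
    """
    totals, favorable = {}, {}
    for group, is_favorable in outcomes:
        totals[group] = totals.get(group, 0) + 1
        favorable[group] = favorable.get(group, 0) + int(is_favorable)
    rates = [favorable[group] / totals[group] for group in totals]
    highest = max(rates)
    return min(rates) / highest if highest else 1.0
```

Running checks like these on a schedule and logging the results gives the periodic audits something concrete to review.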

Designing and contracting for compliance and IP risk

While the U.S. has been largely reluctant to regulate AI at the federal level, states have produced a patchwork of laws and regulations covering, for example, AI in hiring and interviews, automated decision-making, deepfakes and synthetic media, and consumer-facing disclosures for AI agents and chatbots. Also consider other countries and supranational organizations. The EU AI Act is a comprehensive set of AI-focused rules, and it applies extraterritorially to providers of AI systems that are used in the EU or the output of which is used in the EU. Some countries, such as South Korea, have enacted similar legislation, and we expect more countries to eventually follow. Founders should design their systems with compliance flexibility—logging features, opt-in consent modules, adaptable workflows—so they can pivot as rules evolve.

Further, many customers now expect providers to represent compliance with applicable laws, given the wave of newly enacted and emerging AI regulations. In practice, this could mean that the provider commits to comply with laws targeted at the developers or providers of AI systems (for example, anti-bias and audit requirements for recruiting tools) and privacy laws targeted at data processors, while the customer commits to comply with laws targeted at the deployment of AI systems to end users (e.g., privacy laws for controllers and non-discrimination laws).

Beyond emerging compliance obligations, the law itself remains unsettled, particularly around the risks of deploying and using frontier models trained on unlicensed datasets at scale and liability for infringing others’ content.

As the provider, include clear use restrictions and contractual protections against submitting infringing inputs and other misuse, and consider what protections the owners of available third-party LLMs are offering to limit your upstream exposure. Customers, for their part, should adopt AI use policies that govern what users may input and how outputs are used, calibrated to the context of their business and risk tolerance. While there aren’t any settled AI industry norms yet on who bears the risk of IP infringement, providers and customers are thinking through their exposure and risk appetite. This sometimes leads to interesting outcomes, such as a provider offering a pass-through indemnity based on what it is able to recover from the third-party LLM, or a provider procuring AI-specific insurance policies.

Founder takeaway: Build risk management into your operating model. Investors (and potentially regulators and courts) will assess how you monitor and adapt. Be ready to show your process.

Pulling it all together

What does this look like in practice? Picture a mid-stage AI startup selling into healthcare. Their pitch highlights how the tool reduces administrative overhead by 25%, saving nurses two hours per week. They’ve negotiated access to aggregated, de-identified data from multiple hospital systems in compliance with HIPAA, showing how this improves model accuracy without exposing individual records. They’ve invested in explainability features—audit logs and decision rationales—because healthcare is high stakes. And they run quarterly bias audits, publishing summaries that give customers confidence.

Now picture the flip side: A startup touts “revolutionary AI” for fraud detection but offers no proof. It demands sweeping, open-ended training rights with no guardrails. Its model makes opaque calls to freeze bank accounts—locking people out of their own funds—and when customers ask about bias safeguards or recourse, the team waves them off.

Which company do you think gets the enterprise deal? Trust, demonstrated with evidence and transparency, is a key factor.

Closing thoughts

For AI founders, growth follows credibility. Short-term success can come from dazzling demos or effective marketing. But lasting success is about framing your solution around measurable outcomes, negotiating data rights responsibly, tailoring transparency to the stakes, and thinking about risk management at every level of your product or service. Done well, it can be the difference between a short-lived pilot and a steady-state subscription. You’ll also reduce regulatory and legal friction. And you’ll signal to investors that this isn’t just a hype play, but an enduring business.

At Pilot, we handle the financial backbone—bookkeeping, reporting, and compliance—so AI founders can focus on growth with clarity.

Fenwick is a leading law firm, purpose-built to guide visionary tech and life sciences companies and their investors through every stage of growth, from startups securing their first round of funding to leading publicly traded global enterprises. As one of Silicon Valley’s original legal practices, today we have over 600 lawyers, patent agents, engineers, and scientists serving clients all over the world. Named 2024 Practice Group of the Year for both Life Sciences and Technology by Law360, we are consistently ranked a Chambers first-tier firm for delivering the deep experience and technical skill that help innovators at the forefront of their industries shatter boundaries and redefine what’s possible. Visit www.fenwick.com to learn more.